2. Connect to Azure SQL

Destinations

Ingested data is written to a destination store, where it is consumed for analysis and reporting. DataStori writes data to:

- The customer's cloud storage (AWS S3, Azure Blob or GCS), and

- Optionally, a SQL database selected by the customer.

This article explains how to write data to Azure Blob and Azure SQL.

Create a New Destination Connection

As part of the DataStori onboarding process, we often help users set up their cloud storage. This is an optional service.

The storage location can be specified while configuring pipelines and does not need to be defined at any earlier stage of the setup.

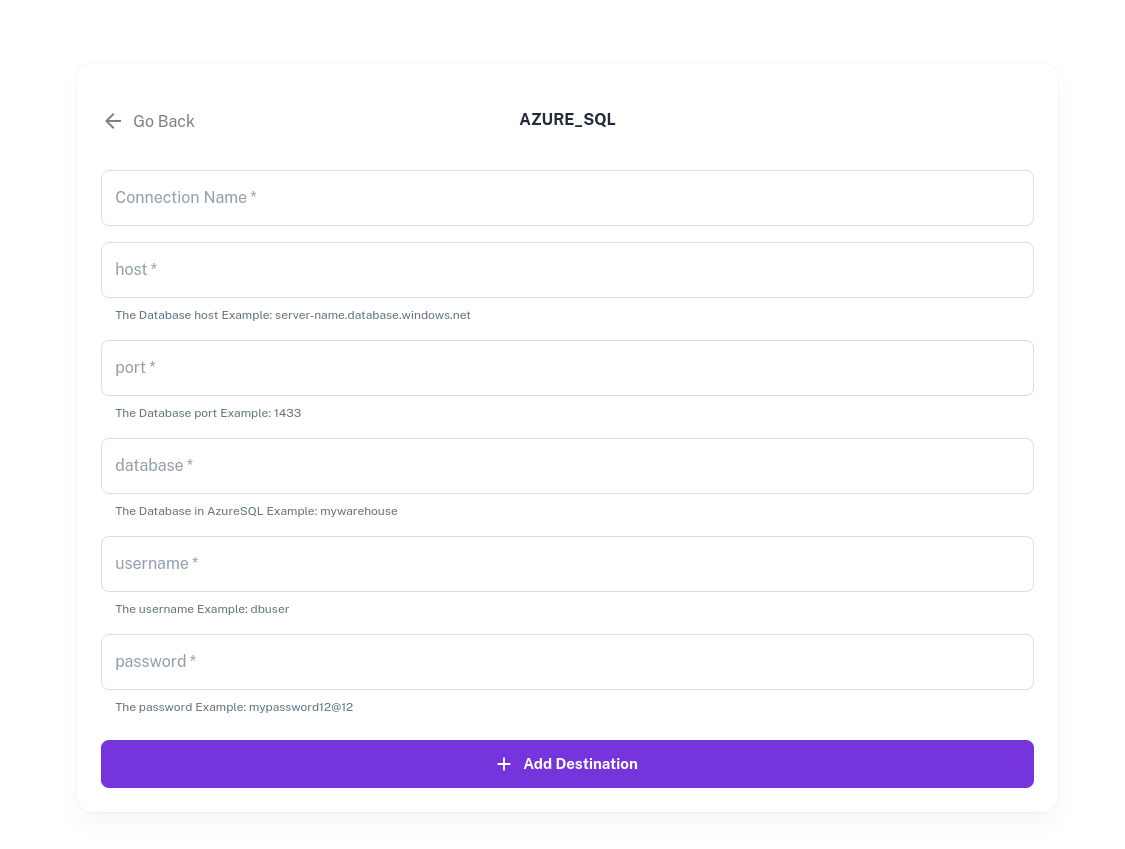

In the Destinations tab in DataStori, click '+ Add New Destination' and select 'Azure SQL'.

Fill in and submit the connection form. In this example, we name the new destination 'Reporting DB'.

Users can keep their reporting database private, with no access from any public IP. Since pipelines execute within the customer's own cloud, the pipeline code can connect to the database over a private network.
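If the database is reachable only over a private network (for example, through an Azure Private Endpoint), a quick sanity check from inside that network is whether the server name resolves to a private IP address. The sketch below is a generic Python check, not part of DataStori; the function name `resolves_to_private_ip` is hypothetical, and it assumes the default Azure SQL port 1433.

```python
import ipaddress
import socket


def resolves_to_private_ip(host: str, port: int = 1433) -> bool:
    """Return True if every address `host` resolves to lies in a private range.

    Hypothetical helper: with a private endpoint in place, the Azure SQL server
    name is expected to resolve to a private address (e.g. 10.x.x.x) when the
    lookup is made from inside the virtual network.
    """
    # getaddrinfo returns one tuple per address/socket type; element 4 is the
    # socket address, whose first item is the IP address string.
    infos = socket.getaddrinfo(host, port, proto=socket.IPPROTO_TCP)
    addresses = {info[4][0] for info in infos}
    return all(ipaddress.ip_address(addr).is_private for addr in addresses)
```

Run from a machine in the same network as the pipelines, for example `resolves_to_private_ip("<your-server>.database.windows.net")`; a `False` result suggests the name is still resolving to a public endpoint.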

The credentials entered by users are encrypted and stored in DataStori. They are not verified when the form is submitted; they are verified only when the pipelines run in the customer's environment.
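Because the credentials are only checked at pipeline run time, users may want to confirm them beforehand from within their own network. The following is a minimal sketch under stated assumptions: it uses the third-party `pyodbc` package with Microsoft's "ODBC Driver 18 for SQL Server" and SQL authentication, and the helper names `build_connection_string` and `check_credentials` are hypothetical. It does not reflect how DataStori itself connects.

```python
def build_connection_string(server: str, database: str, user: str, password: str) -> str:
    """Build an ODBC connection string for Azure SQL (SQL authentication).

    Assumes the "ODBC Driver 18 for SQL Server" is installed on the machine.
    """
    parts = {
        "Driver": "{ODBC Driver 18 for SQL Server}",
        "Server": f"tcp:{server},1433",
        "Database": database,
        "Uid": user,
        # Brace-quote the password so characters such as ';' are safe;
        # a literal '}' inside braces is escaped by doubling it.
        "Pwd": "{" + password.replace("}", "}}") + "}",
        "Encrypt": "yes",
        "TrustServerCertificate": "no",
    }
    return ";".join(f"{key}={value}" for key, value in parts.items()) + ";"


def check_credentials(conn_str: str) -> bool:
    """Try to log in and run a trivial query; return True on success."""
    import pyodbc  # third-party: pip install pyodbc

    try:
        conn = pyodbc.connect(conn_str, timeout=30)  # login timeout in seconds
    except pyodbc.Error:
        return False
    try:
        conn.cursor().execute("SELECT 1").fetchone()
        return True
    finally:
        conn.close()
```

Usage: `check_credentials(build_connection_string("<your-server>.database.windows.net", "<database>", "<user>", "<password>"))`, run from a host that can reach the database over the private network.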

Data Policies and Privacy

Since all ingested data is stored in the customer's cloud environment, it can be governed and audited according to their data policies.